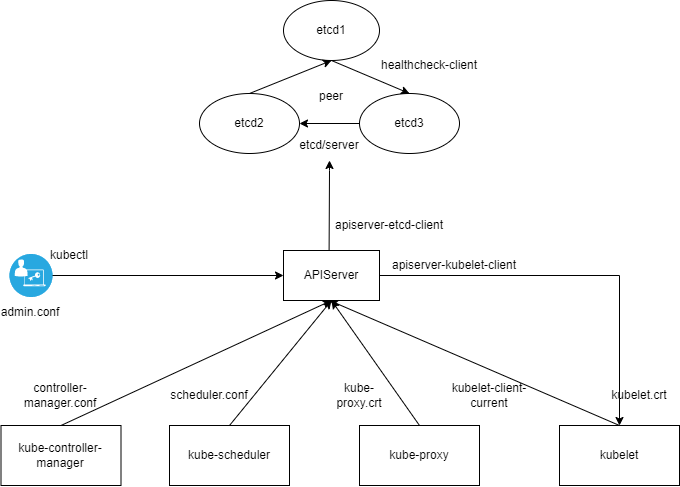

Locations and purposes of the cluster certificates

- /etc/kubernetes/pki/etcd/ca.crt: etcd CA certificate

- /etc/kubernetes/pki/etcd/ca.key: etcd CA private key

- /etc/kubernetes/pki/etcd/healthcheck-client.crt: client certificate used for etcd health checks

- /etc/kubernetes/pki/etcd/healthcheck-client.key: its private key

- /etc/kubernetes/pki/etcd/peer.crt: used for communication between etcd members (peer port 2380)

- /etc/kubernetes/pki/etcd/peer.key: its private key

- /etc/kubernetes/pki/etcd/server.crt: etcd server certificate, presented to clients (client port 2379)

- /etc/kubernetes/pki/etcd/server.key: its private key

- /etc/kubernetes/pki/apiserver-etcd-client.crt: etcd client certificate (kube-apiserver uses it to talk to etcd)

- /etc/kubernetes/pki/apiserver-etcd-client.key: its private key
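A quick way to see how long each of these certificates remains valid is to print the `notAfter` date of every `.crt` file. The sketch below assumes the kubeadm directory layout listed above; `list_cert_expiry` is a hypothetical helper name, and it relies only on `openssl x509 -noout -enddate`.

```shell
#!/usr/bin/env bash
# list_cert_expiry [DIR]: print "<file>: <expiry date>" for every *.crt under DIR.
# DIR defaults to the kubeadm PKI directory (assumption); pki/etcd/*.crt is included.
list_cert_expiry() {
  local dir="${1:-/etc/kubernetes/pki}" f end
  find "$dir" -type f -name '*.crt' | sort | while read -r f; do
    # openssl prints e.g. "notAfter=Jul 23 08:00:00 2023 GMT"; strip the prefix
    end=$(openssl x509 -in "$f" -noout -enddate 2>/dev/null) || end="notAfter=unreadable"
    printf '%s: %s\n' "$f" "${end#notAfter=}"
  done
}
```

For example, `list_cert_expiry /etc/kubernetes/pki/etcd` prints one line per etcd certificate; files openssl cannot parse are reported as `unreadable` instead of aborting the loop.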

Logical topology of the etcd cluster certificates

Backing up etcd cluster data

Backing up a TLS-enabled etcd cluster

Before the first backup, copy the etcdctl binary from the etcd container to the master01 host

# Find the etcd container ID with docker ps | grep etcd. After copying, make the binary executable: chmod +x /usr/bin/etcdctl

docker cp <etcd containerid>:/usr/local/bin/etcdctl /usr/bin/
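On a kubeadm node running Docker, `docker ps | grep etcd` usually matches two containers: etcd itself and its Pod's pause (sandbox) container, which does not contain etcdctl. The sketch below assumes kubelet's Docker naming scheme `k8s_<container>_<pod>_...` (the pause container is `k8s_POD_...`), filters for `k8s_etcd_`, and copies and marks the binary executable in one step; `install_etcdctl` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# install_etcdctl [DEST_DIR]: copy etcdctl out of the running etcd container.
# Assumption: kubelet names the container "k8s_etcd_<pod>_..."; the pause
# container ("k8s_POD_...") does not match this filter.
install_etcdctl() {
  local dest="${1:-/usr/bin}" cid
  cid=$(docker ps -q --filter 'name=k8s_etcd_' | head -n 1)
  if [ -z "$cid" ]; then
    echo "no running etcd container found" >&2
    return 1
  fi
  docker cp "$cid:/usr/local/bin/etcdctl" "$dest/" || return 1
  chmod +x "$dest/etcdctl"
}
```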

Verify the cluster data

# Enter the container

docker exec -it etcd1 sh

# Use the etcdctl v3 API (the default since etcd v3.4; setting it explicitly also covers older etcdctl builds)

export ETCDCTL_API=3

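# Note: TLS is enabled on this cluster, so etcdctl needs the certificates to read cluster data. With the self-signed certificate names above, the commands are: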

# Check cluster membership

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

# Find the cluster leader (IS LEADER column)

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key -w table endpoint status --cluster

# List all keys in the cluster

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get --prefix --keys-only=true /
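Every command above repeats the same endpoint and certificate flags. A small shell function can keep them in one place; this is a sketch, and `etcdctl_tls`, `ETCD_PKI` and `ETCD_ENDPOINTS` are hypothetical names whose defaults match the kubeadm paths used above.

```shell
#!/usr/bin/env bash
# etcdctl_tls ARGS...: run etcdctl (v3 API) with the kubeadm etcd certificates.
# ETCD_PKI / ETCD_ENDPOINTS are hypothetical overrides; defaults mirror the commands above.
etcdctl_tls() {
  local pki="${ETCD_PKI:-/etc/kubernetes/pki/etcd}"
  ETCDCTL_API=3 etcdctl \
    --endpoints="${ETCD_ENDPOINTS:-https://127.0.0.1:2379}" \
    --cacert="$pki/ca.crt" \
    --cert="$pki/server.crt" \
    --key="$pki/server.key" \
    "$@"
}
```

With it, the three commands above become `etcdctl_tls member list`, `etcdctl_tls -w table endpoint status --cluster` and `etcdctl_tls get --prefix --keys-only=true /`.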

Run the backup on master01

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key snapshot save "snapshot-etcdcluster-$(date +%Y%m%d).db"

Inspect the backup

ETCDCTL_API=3 etcdctl --write-out=table snapshot status "snapshot-etcdcluster-$(date +%Y%m%d).db"
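The save and status commands each call `date` on their own, so a backup started just before midnight would be checked under a different file name. A sketch that computes the name once, takes the snapshot, and verifies it before reporting success; `backup_etcd` is a hypothetical helper and the TLS flags are the kubeadm paths used above.

```shell
#!/usr/bin/env bash
# backup_etcd [BACKUP_DIR]: save a dated snapshot and verify it can be read back.
# Prints the snapshot path on success; returns non-zero on any failure.
backup_etcd() {
  local dir="${1:-.}" pki="/etc/kubernetes/pki/etcd" file
  # Compute the file name once so save and status refer to the same file
  file="$dir/snapshot-etcdcluster-$(date +%Y%m%d).db"
  ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
    --cacert="$pki/ca.crt" --cert="$pki/server.crt" --key="$pki/server.key" \
    snapshot save "$file" >&2 || return 1
  # A snapshot whose status cannot be read is not a usable backup
  ETCDCTL_API=3 etcdctl --write-out=table snapshot status "$file" >&2 || return 1
  echo "$file"
}
```

In etcd v3.5, `etcdctl snapshot status` and `snapshot restore` still work but print a deprecation notice recommending `etcdutl`; this guide keeps using etcdctl.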

Backing up an etcd cluster without TLS

Before the first backup, copy the etcdctl binary from the container to the host

# Find the etcd container ID with docker ps | grep etcd. After copying, make the binary executable: chmod +x /usr/bin/etcdctl

docker cp <etcd containerid>:/usr/local/bin/etcdctl /usr/bin/

Verify the data

# Enter the container

docker exec -it etcd sh

# Use the etcdctl v3 API

export ETCDCTL_API=3

# List all keys

etcdctl get --prefix --keys-only=true /

Note: TLS is not enabled on this etcd container, so etcdctl can read the data directly inside the container without certificates

# Write a test key manually

etcdctl put /learn data11

# Read the key back

etcdctl get /learn

Run the backup on the server

ETCDCTL_API=3 etcdctl --endpoints=http://127.0.0.1:2379 snapshot save "snapshot-etcd-$(date +%Y%m%d).db"

Inspect the backup

ETCDCTL_API=3 etcdctl --write-out=table snapshot status "snapshot-etcd-$(date +%Y%m%d).db"

Restoring data to a single-node etcd container

Provision a server with Docker installed and configured:

etcd:192.168.1.20

Upload the backup snapshot-etcd-20220723.db to /root on the server

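Open the etcd ports. If firewall-cmd reports that firewalld is not running, start it (systemctl start firewalld) and run the commands again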

firewall-cmd --get-active-zones

firewall-cmd --list-port

firewall-cmd --zone=public --permanent --add-port=2379/tcp --add-port=2380/tcp

firewall-cmd --reload

firewall-cmd --list-port
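The firewall steps, including the "start firewalld first" note, can be folded into one function that starts firewalld only when `firewall-cmd --state` reports it is not running. A sketch; `open_etcd_ports` is a hypothetical name and the `public` zone is taken from the commands above.

```shell
#!/usr/bin/env bash
# open_etcd_ports: ensure firewalld is running, then open 2379/2380 permanently.
open_etcd_ports() {
  # firewall-cmd --state exits non-zero when firewalld is not running
  if ! firewall-cmd --state >/dev/null 2>&1; then
    systemctl start firewalld || return 1
  fi
  firewall-cmd --zone=public --permanent --add-port=2379/tcp --add-port=2380/tcp || return 1
  # --permanent only changes the saved config; reload applies it to the runtime
  firewall-cmd --reload || return 1
  firewall-cmd --zone=public --list-ports
}
```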

Restore procedure

Start the etcd container (this creates the container; its data is replaced in the following steps)

docker run --restart=always --net host --name etcd -d \

-v /var/lib/etcd:/etcd-data \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/etcd-data --name etcd \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--advertise-client-urls http://0.0.0.0:2379 \

--listen-client-urls http://0.0.0.0:2379

Stop the etcd container

docker stop <containerid>

Delete the data directory

rm -rf /var/lib/etcd

Restore the data

# Run the restore on the etcd host (192.168.1.20), in /root where the snapshot was uploaded

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcd-20220723.db --data-dir=/var/lib/etcd
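The stop, delete, restore and start steps can be run as one sequence. This sketch deliberately differs from the steps above in one way: it moves the old data directory aside instead of deleting it, so a failed restore still leaves a copy to fall back on. `restore_single` is a hypothetical helper; the defaults (`/var/lib/etcd`, container `etcd`) match this section. `etcdctl snapshot restore` refuses to write into an existing data directory, which is why the directory must be gone first.

```shell
#!/usr/bin/env bash
# restore_single SNAPSHOT [DATA_DIR] [CONTAINER]
# Stop the container, move the old data aside (instead of rm -rf), restore, start.
restore_single() {
  local snap="$1" data="${2:-/var/lib/etcd}" name="${3:-etcd}"
  [ -f "$snap" ] || { echo "snapshot not found: $snap" >&2; return 1; }
  docker stop "$name" || return 1
  if [ -e "$data" ]; then
    # Keep a timestamped copy of the old data as a fallback
    mv "$data" "$data.bak.$(date +%Y%m%d%H%M%S)" || return 1
  fi
  # The target directory must not exist; snapshot restore creates it
  ETCDCTL_API=3 etcdctl snapshot restore "$snap" --data-dir="$data" || return 1
  docker start "$name"
}
```

Example: `restore_single /root/snapshot-etcd-20220723.db`. Remove the `.bak.*` directory once the restored data has been verified.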

Verify the etcd data

# Start the etcd container

docker start etcd

# Confirm that the etcd container is running normally

docker ps

docker logs -f --tail=200 <containerid>

# Enter the container

docker exec -it etcd sh

# Use the etcdctl v3 API

export ETCDCTL_API=3

etcdctl get --prefix --keys-only=true / # list all keys

# Note: TLS is not enabled on this etcd container, so etcdctl can read the data directly inside the container

# Reference commands for data maintenance

etcdctl put /learn data11

etcdctl get /learn

etcdctl del /learn

Restoring data to an etcd cluster without TLS

Provision three servers, each with Docker installed and configured:

- etcd1:192.168.1.10

- etcd2:192.168.1.11

- etcd3:192.168.1.12

Upload the backup snapshot-etcdcluster-20220723.db to /root on all three servers

Log in to etcd1, etcd2 and etcd3 and open the ports

firewall-cmd --get-active-zones

firewall-cmd --list-port

firewall-cmd --zone=public --permanent --add-port=2379/tcp --add-port=2380/tcp

firewall-cmd --reload

firewall-cmd --list-port

Cluster restore procedure

Restore cluster member etcd1

Start the etcd1 container

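# Note: --initial-cluster-state may be new or existing, but use the same value on all three nodes. The --initial-* flags only take effect when bootstrapping an empty data directory and are ignored once it holds restored data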

docker run --restart=always --net host -it --name etcd1 -d \

-v /var/lib/etcd:/etcd-data \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/etcd-data --name etcd1 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=http://0.0.0.0:2380 \

--initial-advertise-peer-urls=http://192.168.1.10:2380 \

--advertise-client-urls=http://192.168.1.10:2379 \

--listen-client-urls=http://0.0.0.0:2379 \

--initial-cluster-state=existing \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380"
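The same `--initial-cluster` value appears in every `docker run` and restore command, and a typo in one copy makes that member fail to join. A sketch of a helper that builds the value from `name=ip` pairs, assuming peer port 2380 as used throughout this guide; `initial_cluster` is a hypothetical name.

```shell
#!/usr/bin/env bash
# initial_cluster SCHEME NAME=IP...: build the --initial-cluster value,
# e.g. "etcd1=http://192.168.1.10:2380,etcd2=...". Peer port 2380 is assumed.
initial_cluster() {
  local scheme="$1" pair out=""
  shift
  for pair in "$@"; do
    # ${out:+$out,} adds a comma separator only after the first entry
    out="${out:+$out,}${pair%%=*}=${scheme}://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}
```

Usage: `--initial-cluster="$(initial_cluster http etcd1=192.168.1.10 etcd2=192.168.1.11 etcd3=192.168.1.12)"`; pass `https` for the TLS clusters later in this guide.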

Stop the etcd1 container

docker stop etcd1

Delete the data directory

rm -rf /var/lib/etcd

Restore the data

# Run the restore on the etcd1 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db --data-dir=/var/lib/etcd --name etcd1 --initial-cluster etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380 --initial-cluster-token etcd-cluster --initial-advertise-peer-urls http://192.168.1.10:2380

Restore cluster member etcd2

Start the etcd2 container

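# Note: --initial-cluster-state may be new or existing, but use the same value on all three nodes. The --initial-* flags only take effect when bootstrapping an empty data directory and are ignored once it holds restored data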

docker run --restart=always --net host -it --name etcd2 -d \

-v /var/lib/etcd:/etcd-data \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/etcd-data --name etcd2 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=http://0.0.0.0:2380 \

--initial-advertise-peer-urls=http://192.168.1.11:2380 \

--advertise-client-urls=http://192.168.1.11:2379 \

--listen-client-urls=http://0.0.0.0:2379 \

--initial-cluster-state=existing \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380"

Stop the etcd2 container

docker stop etcd2

Delete the data directory

rm -rf /var/lib/etcd

Restore the data

# Run the restore on the etcd2 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db --data-dir=/var/lib/etcd --name etcd2 --initial-cluster etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380 --initial-cluster-token etcd-cluster --initial-advertise-peer-urls http://192.168.1.11:2380

Restore cluster member etcd3

Start the etcd3 container

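# Note: --initial-cluster-state may be new or existing, but use the same value on all three nodes. The --initial-* flags only take effect when bootstrapping an empty data directory and are ignored once it holds restored data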

docker run --restart=always --net host -it --name etcd3 -d \

-v /var/lib/etcd:/etcd-data \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/etcd-data --name etcd3 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=http://0.0.0.0:2380 \

--initial-advertise-peer-urls=http://192.168.1.12:2380 \

--advertise-client-urls=http://192.168.1.12:2379 \

--listen-client-urls=http://0.0.0.0:2379 \

--initial-cluster-state=existing \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380"

Stop the etcd3 container

docker stop etcd3

Delete the data directory

rm -rf /var/lib/etcd

Restore the data

# Run the restore on the etcd3 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db --data-dir=/var/lib/etcd --name etcd3 --initial-cluster etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380 --initial-cluster-token etcd-cluster --initial-advertise-peer-urls http://192.168.1.12:2380
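The three restore commands differ only in the member name and peer IP. A sketch of a helper that derives both flags from those two values and refuses to run if the pair is not in the shared `--initial-cluster` list, which catches copy-paste mistakes between nodes. `restore_member` and the `CLUSTER` variable are hypothetical; the default `CLUSTER` is the no-TLS list used above. For the TLS clusters, pass `https` as the scheme and set `CLUSTER` to the `https://` list.

```shell
#!/usr/bin/env bash
# CLUSTER (hypothetical): the shared --initial-cluster value for all members.
CLUSTER="${CLUSTER:-etcd1=http://192.168.1.10:2380,etcd2=http://192.168.1.11:2380,etcd3=http://192.168.1.12:2380}"

# restore_member SNAPSHOT NAME IP [SCHEME] [DATA_DIR]: per-node snapshot restore.
restore_member() {
  local snap="$1" name="$2" ip="$3" scheme="${4:-http}" data="${5:-/var/lib/etcd}"
  # Refuse to restore a member that is not listed exactly in CLUSTER
  case ",$CLUSTER," in
    *",$name=$scheme://$ip:2380,"*) ;;
    *) echo "$name=$scheme://$ip:2380 is not in CLUSTER" >&2; return 1 ;;
  esac
  ETCDCTL_API=3 etcdctl snapshot restore "$snap" \
    --data-dir="$data" \
    --name "$name" \
    --initial-cluster "$CLUSTER" \
    --initial-cluster-token etcd-cluster \
    --initial-advertise-peer-urls "$scheme://$ip:2380"
}
```

On etcd2, for example: `restore_member snapshot-etcdcluster-20220723.db etcd2 192.168.1.11`.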

Verify the etcd cluster

# Start the etcd container on all three nodes at about the same time; run the following on each node

docker ps -a | grep etcd | cut -d' ' -f 1 | xargs -n 1 docker start

# Confirm that the etcd container is running normally on all three nodes

docker ps

docker logs -f --tail=200 <containerid>

# Enter the etcd1 container

docker exec -it <containerid> sh

# Use the etcdctl v3 API

export ETCDCTL_API=3

# List all keys

# Note: TLS is not enabled on this cluster, so etcdctl can read cluster data directly inside the container

etcdctl get --prefix --keys-only=true /

# Check the cluster

etcdctl member list

etcdctl endpoint health

etcdctl endpoint status

etcdctl -w table endpoint status --cluster
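`etcdctl endpoint health` without `--endpoints` only checks the local member. A sketch that checks all three members explicitly and fails unless every one reports healthy. It assumes the member IPs above and relies on etcdctl printing one `<endpoint> is healthy` line per healthy member; `check_cluster_health` is a hypothetical helper. For a TLS cluster, add `--cacert`, `--cert` and `--key` to the etcdctl call.

```shell
#!/usr/bin/env bash
# check_cluster_health [ENDPOINTS]: succeed only if every listed endpoint is healthy.
check_cluster_health() {
  local eps="${1:-http://192.168.1.10:2379,http://192.168.1.11:2379,http://192.168.1.12:2379}"
  local want healthy out
  # Number of endpoints requested (comma-separated list)
  want=$(printf '%s\n' "$eps" | tr ',' '\n' | grep -c .)
  # Merge stderr so error lines for unhealthy members are shown too
  out=$(ETCDCTL_API=3 etcdctl --endpoints="$eps" endpoint health 2>&1)
  healthy=$(printf '%s\n' "$out" | grep -c ' is healthy')
  printf '%s\n' "$out"
  [ "$healthy" -eq "$want" ]
}
```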

# Reference commands for data maintenance

etcdctl put /learn data22

etcdctl get /learn

etcdctl del /learn

Restoring data to a TLS-enabled etcd cluster (issuing new certificates manually)

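Practical tip: if the new etcd cluster uses TLS and the node IPs have changed, issue new certificates manually as described in the appendix. If the failed cluster's node certificates are still usable, keep using them.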
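Whether the old certificates are "still usable" comes down to two checks: the certificate has not expired, and its subject alternative names include the node's IP. A sketch, assuming OpenSSL's text dump lists IP SANs as `IP Address:<ip>`; `cert_usable` is a hypothetical helper. With kubeadm, each node's `server.crt` and `peer.crt` normally carry that node's own IP, so check each node's certificates against its own address.

```shell
#!/usr/bin/env bash
# cert_usable CERT IP: succeed if CERT has not expired and lists IP as a SAN.
cert_usable() {
  local cert="$1" ip="$2"
  # -checkend 0 exits non-zero if the certificate has already expired
  openssl x509 -in "$cert" -noout -checkend 0 >/dev/null || return 1
  # Extract "IP Address:x.x.x.x" entries one per line and compare exactly,
  # so that 192.168.1.1 does not match 192.168.1.10
  openssl x509 -in "$cert" -noout -text \
    | grep -oE 'IP Address:[0-9.]+' \
    | grep -qxF "IP Address:$ip"
}
```

Example, on the node that will become etcd1: `cert_usable /etc/kubernetes/pki/etcd/peer.crt 192.168.1.10 && echo usable`.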

Resources

Each server has Docker installed and configured:

- etcd1:192.168.1.10

- etcd2:192.168.1.11

- etcd3:192.168.1.12

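On each server, set the hostname to match the corresponding node of the old cluster (skip if already set). After each change, su opens a new shell so the prompt shows the new hostname

# On etcd1 (192.168.1.10)

hostnamectl set-hostname master01

su

# On etcd2 (192.168.1.11)

hostnamectl set-hostname master02

su

# On etcd3 (192.168.1.12)

hostnamectl set-hostname master03

su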

Upload the backup snapshot-etcdcluster-20220723.db to /root on all three servers

Log in to etcd1, etcd2 and etcd3 and pull the image

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.1-0

Log in to etcd1, etcd2 and etcd3 and open the ports

firewall-cmd --get-active-zones

firewall-cmd --list-port

firewall-cmd --zone=public --permanent --add-port=2379/tcp --add-port=2380/tcp

firewall-cmd --reload

firewall-cmd --list-port

Restore the data

Restore cluster member etcd1

Start the etcd1 container

# Note: --initial-cluster-state may be new or existing, but use the same value on all three nodes

docker run --restart=always --net host -it --name etcd1 -d \

-v /data/etcd/certs:/certs \

-v /data/etcd/data/:/var/lib/etcd \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/var/lib/etcd --name=etcd1 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=https://0.0.0.0:2380 \

--initial-advertise-peer-urls=https://192.168.1.10:2380 \

--advertise-client-urls=https://192.168.1.10:2379 \

--listen-client-urls=https://0.0.0.0:2379 \

--trusted-ca-file=/certs/etcd-ca.pem \

--auto-tls=true \

--cert-file=/certs/etcd-server.pem \

--key-file=/certs/etcd-server-key.pem \

--client-cert-auth=true \

--peer-trusted-ca-file=/certs/etcd-ca.pem \

--peer-auto-tls=true \

--peer-cert-file=/certs/peer.pem \

--peer-key-file=/certs/peer-key.pem \

--peer-client-cert-auth=true \

--initial-cluster-state=new \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380"

Stop the etcd1 container

docker stop etcd1

Delete the data directory

rm -rf /data/etcd/data/

Restore the data

# Run the restore on the etcd1 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db \

--data-dir=/data/etcd/data/ \

--name etcd1 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.10:2380

Restore cluster member etcd2

Start the etcd2 container

# Note: --initial-cluster-state may be new or existing, but use the same value on all three nodes

docker run --restart=always --net host -it --name etcd2 -d \

-v /data/etcd/certs:/certs \

-v /data/etcd/data/:/var/lib/etcd \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/var/lib/etcd --name=etcd2 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=https://0.0.0.0:2380 \

--initial-advertise-peer-urls=https://192.168.1.11:2380 \

--advertise-client-urls=https://192.168.1.11:2379 \

--listen-client-urls=https://0.0.0.0:2379 \

--trusted-ca-file=/certs/etcd-ca.pem \

--auto-tls=true \

--cert-file=/certs/etcd-server.pem \

--key-file=/certs/etcd-server-key.pem \

--client-cert-auth=true \

--peer-trusted-ca-file=/certs/etcd-ca.pem \

--peer-auto-tls=true \

--peer-cert-file=/certs/peer.pem \

--peer-key-file=/certs/peer-key.pem \

--peer-client-cert-auth=true \

--initial-cluster-state=new \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380"

Stop the etcd2 container

docker stop etcd2

Delete the data directory

rm -rf /data/etcd/data/

Restore the data

# Run the restore on the etcd2 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db \

--data-dir=/data/etcd/data/ \

--name etcd2 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.11:2380

Restore cluster member etcd3

Start the etcd3 container

docker run --restart=always --net host -it --name etcd3 -d \

-v /data/etcd/certs:/certs \

-v /data/etcd/data/:/var/lib/etcd \

-v /etc/localtime:/etc/localtime \

-e ETCDCTL_API=3 \

registry.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/usr/local/bin/etcd \

--data-dir=/var/lib/etcd --name=etcd3 \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=8589934592 \

--listen-peer-urls=https://0.0.0.0:2380 \

--initial-advertise-peer-urls=https://192.168.1.12:2380 \

--advertise-client-urls=https://192.168.1.12:2379 \

--listen-client-urls=https://0.0.0.0:2379 \

--trusted-ca-file=/certs/etcd-ca.pem \

--auto-tls=true \

--cert-file=/certs/etcd-server.pem \

--key-file=/certs/etcd-server-key.pem \

--client-cert-auth=true \

--peer-trusted-ca-file=/certs/etcd-ca.pem \

--peer-auto-tls=true \

--peer-cert-file=/certs/peer.pem \

--peer-key-file=/certs/peer-key.pem \

--peer-client-cert-auth=true \

--initial-cluster-state=new \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380"

Stop the etcd3 container

docker stop etcd3

Delete the data directory

rm -rf /data/etcd/data/

Restore the data

# Run the restore on the etcd3 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220723.db \

--data-dir=/data/etcd/data/ \

--name etcd3 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.12:2380

Verify the etcd cluster

Start the etcd container on all three nodes at about the same time; run the following on each node:

docker ps -a | grep etcd | cut -d' ' -f 1 | xargs -n 1 docker start

Confirm that the etcd container is running normally on all three nodes

docker ps

docker logs -f --tail=200 <containerid>

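Run the following on the etcd1 host. etcdctl is already installed there (it was used for the restore), and the certificates are in /data/etcd/certs. Inside the container the same files are mounted at /certs, so if you work in the container instead (docker exec -it etcd1 sh), replace /data/etcd/certs with /certs in the commands below.

Use the etcdctl v3 API

export ETCDCTL_API=3

Note: TLS is enabled on this cluster, so etcdctl needs the certificates to read cluster data. With the self-signed certificate names above, the commands are: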

# Check cluster status

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

member list

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

endpoint health

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

endpoint status

# List all keys in the cluster

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

get --prefix --keys-only=true /

# Reference commands for data maintenance

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

put /learn dataTest33

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

get /learn

etcdctl --endpoints=https://127.0.0.1:2379 \

--cacert=/data/etcd/certs/etcd-ca.pem \

--cert=/data/etcd/certs/etcd-server.pem \

--key=/data/etcd/certs/etcd-server-key.pem \

del /learn

Restoring data to a TLS-enabled etcd cluster (reusing the original cluster certificates)

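Environment: the three nodes etcd1 (192.168.1.10), etcd2 (192.168.1.11) and etcd3 (192.168.1.12), with etcd running as a kubeadm static Pod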

Upload the backup snapshot-etcdcluster-20220814.db to /root on all three servers

Log in to etcd1, etcd2 and etcd3 and pull the image

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.1-0

Log in to etcd1, etcd2 and etcd3 and open the ports

firewall-cmd --get-active-zones

firewall-cmd --list-port

firewall-cmd --zone=public --permanent --add-port=2379/tcp --add-port=2380/tcp

firewall-cmd --reload

firewall-cmd --list-port

Restore the data

On each etcd node, move the etcd.yaml static Pod manifest one directory up; kubelet then stops the etcd Pod

mv /etc/kubernetes/manifests/etcd.yaml /etc/kubernetes/

Delete the data directory

rm -rf /var/lib/etcd/

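After every node has completed the steps above, run the restore on each host. The --name and --initial-advertise-peer-urls values must match the --name and peer URL in that node's etcd.yaml, and --initial-cluster must list all three members under those names. kubeadm normally names each member after the node hostname (for example master01), so replace etcd1/etcd2/etcd3 below if your manifests use hostnames.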
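Because the member name and peer URL must match the node's own etcd.yaml, they can be read from the manifest that was moved to /etc/kubernetes instead of typed by hand. A sketch assuming kubeadm's manifest format (one `- --flag=value` per line); `manifest_flag` and `restore_from_manifest` are hypothetical helpers. The full member list is still passed explicitly, because on the first kubeadm control-plane node `--initial-cluster` usually lists only that node.

```shell
#!/usr/bin/env bash
# manifest_flag FILE FLAG: print VALUE from a "- --FLAG=VALUE" line of a static Pod manifest.
manifest_flag() {
  sed -n "s/^[[:space:]]*- --$2=//p" "$1" | head -n 1
}

# restore_from_manifest SNAPSHOT CLUSTER [MANIFEST]
# CLUSTER is the full member list, e.g. "master01=https://192.168.1.10:2380,...".
restore_from_manifest() {
  local snap="$1" cluster="$2" mf="${3:-/etc/kubernetes/etcd.yaml}" name peer data
  name=$(manifest_flag "$mf" name)
  peer=$(manifest_flag "$mf" initial-advertise-peer-urls)
  data=$(manifest_flag "$mf" data-dir)
  if [ -z "$name" ] || [ -z "$peer" ]; then
    echo "--name or --initial-advertise-peer-urls not found in $mf" >&2
    return 1
  fi
  ETCDCTL_API=3 etcdctl snapshot restore "$snap" \
    --data-dir="${data:-/var/lib/etcd}" \
    --name "$name" \
    --initial-cluster "$cluster" \
    --initial-cluster-token etcd-cluster \
    --initial-advertise-peer-urls "$peer"
}
```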

Restore etcd1

# Run the restore on the etcd1 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220814.db \

--data-dir=/var/lib/etcd \

--name etcd1 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.10:2380

Restore etcd2

# Run the restore on the etcd2 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220814.db \

--data-dir=/var/lib/etcd \

--name etcd2 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.11:2380

Restore etcd3

# Run the restore on the etcd3 host

ETCDCTL_API=3 etcdctl snapshot restore snapshot-etcdcluster-20220814.db \

--data-dir=/var/lib/etcd \

--name etcd3 \

--initial-cluster etcd1=https://192.168.1.10:2380,etcd2=https://192.168.1.11:2380,etcd3=https://192.168.1.12:2380 \

--initial-cluster-token etcd-cluster \

--initial-advertise-peer-urls https://192.168.1.12:2380

Verify the etcd cluster

Start etcd on all three nodes at about the same time; run the following on each node:

cp /etc/kubernetes/etcd.yaml /etc/kubernetes/manifests/

Copying etcd.yaml back into the manifests directory makes kubelet start the etcd static Pod again

Confirm that etcd is running normally on all three nodes

kubectl get po -o wide -A

kubectl logs -f --tail=100 etcd-master01 -n kube-system

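Enter the container (in a kubeadm cluster etcd runs as the static Pod etcd-<node name>, here etcd-master01)

kubectl exec -it etcd-master01 -n kube-system -- sh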

Use the etcdctl v3 API

export ETCDCTL_API=3

Note: TLS is enabled on this cluster, so etcdctl needs the certificates to read cluster data. The commands are:

# Check cluster status

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key endpoint health

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key endpoint status

# List all keys in the cluster

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get --prefix --keys-only=true /

# Reference commands for data maintenance

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key put /learn dataTest55

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get /learn

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key del /learn

Appendix: issuing etcd cluster certificates manually

Download the certificate tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

cp cfssl_linux-amd64 /usr/local/bin/cfssl

cp cfssljson_linux-amd64 /usr/local/bin/cfssljson

cp cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Issue the etcd certificates

Log in to etcd1 and create the directories

mkdir -p /data/etcd/{certs,data} /root/etcd/certjson/

cd /root/etcd/certjson/

Upload etcd-ca-config.json, etcd-ca-csr.json, etcd-server-csr.json, etcd-peer-csr.json and etcd-client-csr.json to /root/etcd/certjson/
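The contents of these JSON files are not given here. As a sketch only, the function below writes files in cfssl's standard config and CSR format, with the two signing profiles that the `gencert` commands in this appendix reference (`kubernetes` and `apiserver-etcd-client`). Every value in it (expiry, key size, CN and names) is an assumption to adapt, not the original files; `write_cfssl_json` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# write_cfssl_json [DIR]: write example cfssl config/CSR files (all values assumed).
write_cfssl_json() {
  local dir="${1:-/root/etcd/certjson}" cn
  mkdir -p "$dir" || return 1
  # Signing config with the two profiles used by the gencert commands
  cat > "$dir/etcd-ca-config.json" <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      },
      "apiserver-etcd-client": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "client auth"]
      }
    }
  }
}
EOF
  # One CSR per certificate; only the CN differs (assumed names)
  for cn in etcd-ca etcd-server etcd-peer etcd-client; do
    cat > "$dir/$cn-csr.json" <<EOF
{
  "CN": "$cn",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "O": "etcd", "OU": "etcd" }]
}
EOF
  done
}
```

Without a `ca` section in the CA CSR, cfssl applies its own default validity to the CA certificate, which is one more reason to check the CA expiry after issuing it.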

Issue the etcd CA certificate

cfssl gencert -initca /root/etcd/certjson/etcd-ca-csr.json | cfssljson -bare /data/etcd/certs/etcd-ca

Check the validity period of the etcd CA certificate

openssl x509 -in /data/etcd/certs/etcd-ca.pem -text -noout | grep Not

Issue the etcd server certificate

cfssl gencert -ca=/data/etcd/certs/etcd-ca.pem \

-ca-key=/data/etcd/certs/etcd-ca-key.pem \

-config=/root/etcd/certjson/etcd-ca-config.json -profile=kubernetes \

-hostname=127.0.0.1,master01,master02,master03,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.1.10,192.168.1.11,192.168.1.12 \

/root/etcd/certjson/etcd-server-csr.json | cfssljson -bare /data/etcd/certs/etcd-server

Check the validity period of the etcd server certificate

openssl x509 -in /data/etcd/certs/etcd-server.pem -text -noout | grep Not

Issue the etcd peer certificate

cfssl gencert -ca=/data/etcd/certs/etcd-ca.pem \

-ca-key=/data/etcd/certs/etcd-ca-key.pem \

-config=/root/etcd/certjson/etcd-ca-config.json -profile=kubernetes \

-hostname=127.0.0.1,master01,master02,master03,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.1.10,192.168.1.11,192.168.1.12 \

/root/etcd/certjson/etcd-peer-csr.json | cfssljson -bare /data/etcd/certs/peer

Check the validity period of the etcd peer certificate

openssl x509 -in /data/etcd/certs/peer.pem -text -noout | grep Not

Issue the etcd client certificate

cfssl gencert -ca=/data/etcd/certs/etcd-ca.pem \

-ca-key=/data/etcd/certs/etcd-ca-key.pem \

-config=/root/etcd/certjson/etcd-ca-config.json -profile=apiserver-etcd-client \

-hostname=127.0.0.1,master01,master02,master03,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.1.10,192.168.1.11,192.168.1.12 \

/root/etcd/certjson/etcd-client-csr.json | cfssljson -bare /data/etcd/certs/apiserver-etcd-client

Check the validity period of the etcd client certificate

openssl x509 -in /data/etcd/certs/apiserver-etcd-client.pem -text -noout | grep Not

Copy the certificates from etcd1 to etcd2 and etcd3. First create the directories on etcd2 and etcd3:

mkdir -p /data/etcd/{certs,data}

Then, on etcd1, change to the certificate directory (the scp commands use relative file names) and copy the files:

cd /data/etcd/certs

scp etcd-ca-key.pem etcd-ca.pem etcd-ca.csr etcd-server.csr etcd-server-key.pem etcd-server.pem peer.csr peer-key.pem peer.pem root@192.168.1.11:/data/etcd/certs/

scp etcd-ca-key.pem etcd-ca.pem etcd-ca.csr etcd-server.csr etcd-server-key.pem etcd-server.pem peer.csr peer-key.pem peer.pem root@192.168.1.12:/data/etcd/certs/